Problem

My previous research on acute care resuscitation environments identified four major problems for clinical learners and teams. First, clinicians had trouble communicating in "code," or resuscitation, scenarios. Second, current cognitive aids can be difficult to interpret and manage in code scenarios, particularly for learners, which contributes to problems retrieving information and following the current state of a code. Third, a large influx of people enters the scene, making it difficult for individuals to manage and delegate tasks to team members in a cohesive way. Fourth, electronic medical records (EMRs) are terrible as a documentation medium for these settings, which resulted in clinicians documenting on paper towels! This is not how healthcare environments should operate, especially ones that involve the care of children.

But what other alternatives do clinicians have? They struggle with trying to manage an environment, provide care to patients, and operate in a way that they believe is the best path for patients. The environment might not be conducive to the varying roles they must operate in, but at least the tools they use could be better. Perhaps we can create documentation tools to support the clinical recorder (the documenter), or we can create tools to support the team that can facilitate better communication.

We can't always fix every problem we identify in a healthcare environment. At least not in one fell swoop, and definitely not with one solution. Instead, my research started with one, and moved from there. Working with clinicians, I decided to focus my efforts on:

- Determining how I could improve the way clinical teams communicate in these environments.

- Determining whether I could ease the information retrieval challenges of current cognitive aids.

Current Cognitive Aids for Resuscitation

I won't try to break down the complexities of resuscitation, because there are many, and they differ between adults and children. The American Heart Association (AHA) provides guidelines for how adult resuscitation is handled, and it's a complex beast. Generally, adults are handled using processes known as Basic Life Support (BLS) and Advanced Cardiovascular Life Support (ACLS), and there are cognitive aids for both. Even so, because adults vary so much in weight and other factors, many situational variances must be taken into account. Thus, it's hard for one cognitive aid to contain all of the information needed for an adult patient.

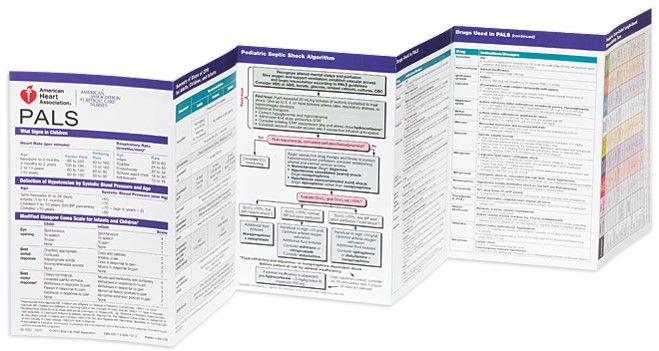

Children, however, are a different story. Children are very resilient, and their weight is much easier to estimate within a given age range. The AHA likewise produces guidelines for managing children in these resuscitation scenarios, called Pediatric Advanced Life Support (PALS). Because children are much less likely to have complicated or varying systems, most of the information can be captured in a single cognitive aid that clinicians carry around. However, this cognitive aid, the PALS card, is 6 pages, front and back!

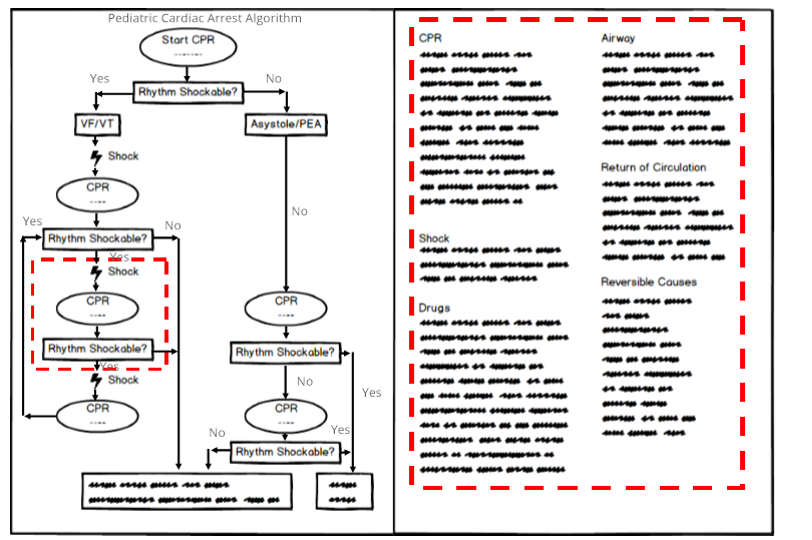

One PALS algorithm, for Pediatric Cardiac Arrest, is shown in the accompanying figure. It handles multiple situations based on a patient's heart condition. The red highlighted areas show how detail is currently separated from the visualization. While the information looks sequential, it isn't, and that contributes to the issues in this space. I couldn't design a new cognitive aid without first understanding the situations clinicians are in, so an undergraduate researcher of mine and I sat in on simulations and PALS classes at a local regional hospital to get a better understanding.

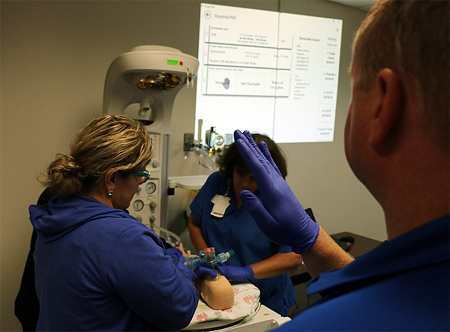

In observing these simulations, we found that clinical learners (here, a learner can be an experienced person as well) had a lot of trouble communicating jobs to team members, because it was hard to decipher what to do when, and who was doing what at any given time. In addition, as new members entered a scene, they asked people who were in the middle of jobs to tell them what they should be doing to help. This mirrored some of the feedback we got in our previous ethnographic study.

Using this information, we worked with clinical educators at three major hospitals to understand their perspectives. What were the major problems they were seeing frequently? What happened in class most often? What happened in the real world?

Displays

Resuscitations, particularly for children, can be rare occurrences depending on the location, and they happen so suddenly that it's nearly impossible to observe one. But educators, experienced clinicians, and nurses had seen them often enough to hit the main points.

"Some people just freeze up!"

"New folks just don't know where to find information on their PALS cards."

"They just stare at their cards and don't do anything. I can't have people like that in the room..."

This led us to explore how one should actually design for these situations. What would get people's heads up? How could we refocus them on the scene? How could we improve how new people entering a scene operate? Clinicians had many suggestions, some of which we thought were interesting. Some clinicians thought a tablet would be appealing, because it could help the team leader focus on steps they should remember, kind of like a checklist. Others thought a tablet could contribute to the same problem of keeping heads down.

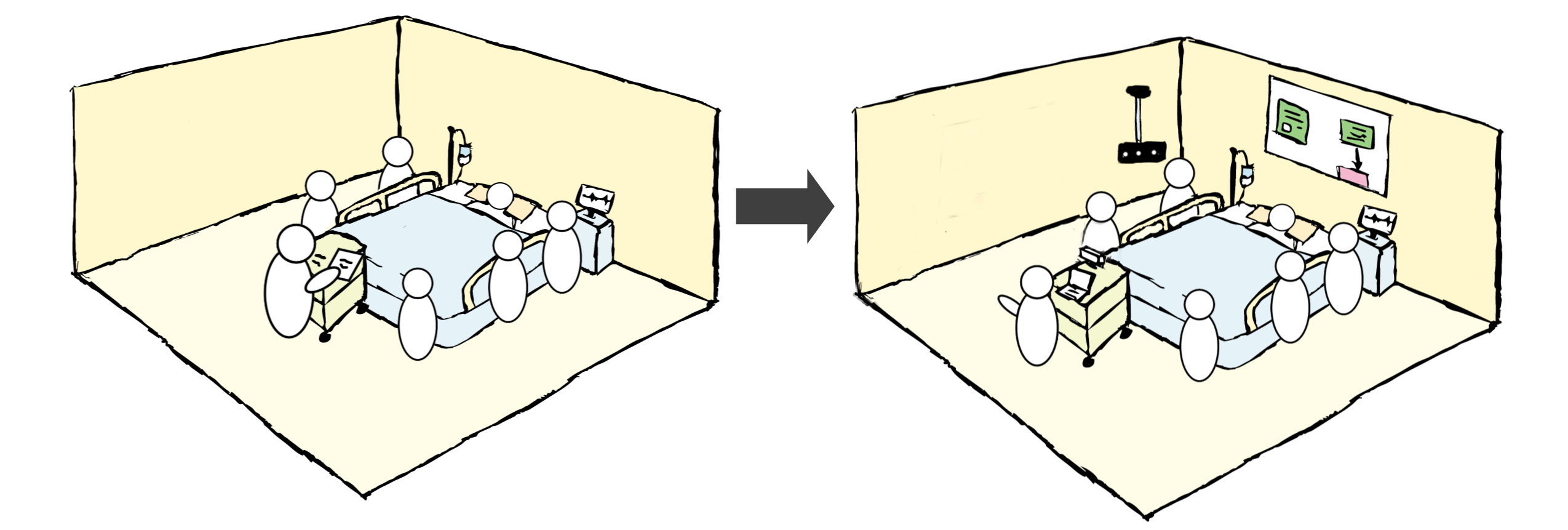

Instead, I approached the problem from a multimodal perspective. Could one provide a way for the team to share information? Could interaction be compartmentalized into different forms to keep heads up? While rooms in the ICU can be small and lack space for additional monitors, that didn't mean something like projectors or patient TVs was out of the question. I opted for this route as a means to get some early feedback with a prototype.

Interaction Modalities

Educators wanted to explore new ways for learners to interact with a shared display system without resorting to having their heads down during code situations. This is a difficult problem to solve, even just for exploration. Options included voice, head-mounted displays (at the time, usually controlled via voice), and gesture-based interaction methods. Noise was already a challenge in these settings, so I couldn't pursue the voice-based options. That left gesture on the table, and a gesture-based approach could give us a plug-and-play path to touch-based interaction later.

At the time, gesture-based interaction was just taking off with hardware like the Kinect and Leap Motion, which use infrared technology. This was an approach the clinicians I worked with liked, but I knew there would be challenges along the way. To mitigate risk, I decided to incorporate both gesture and touch, though the interaction design would be one main challenge.

UI Design

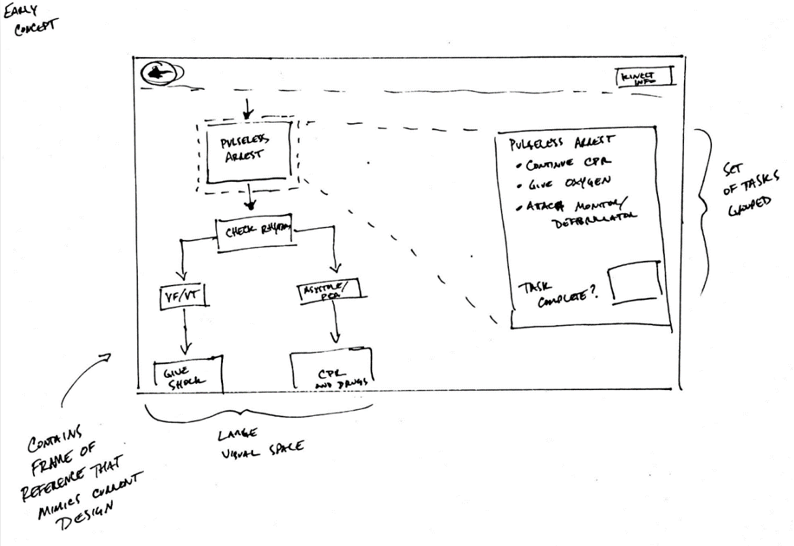

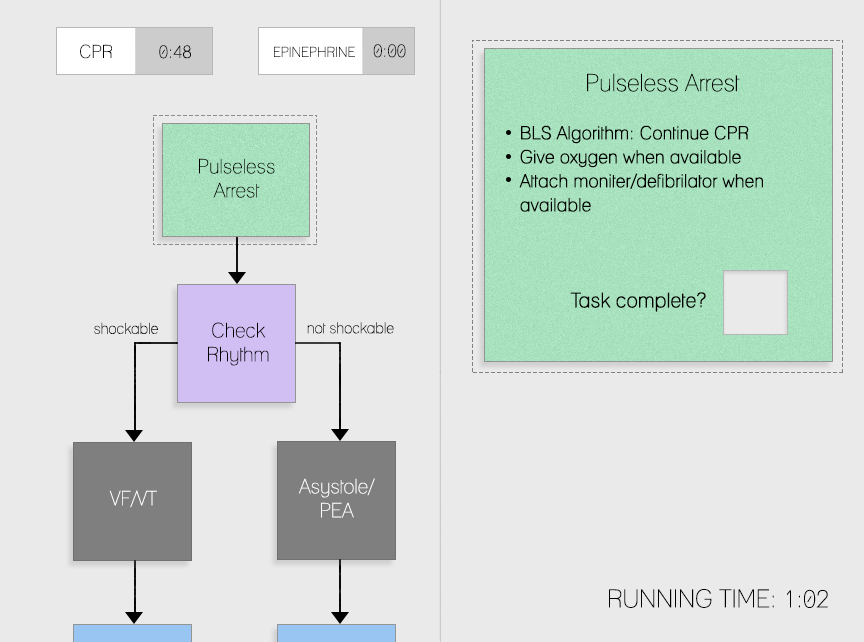

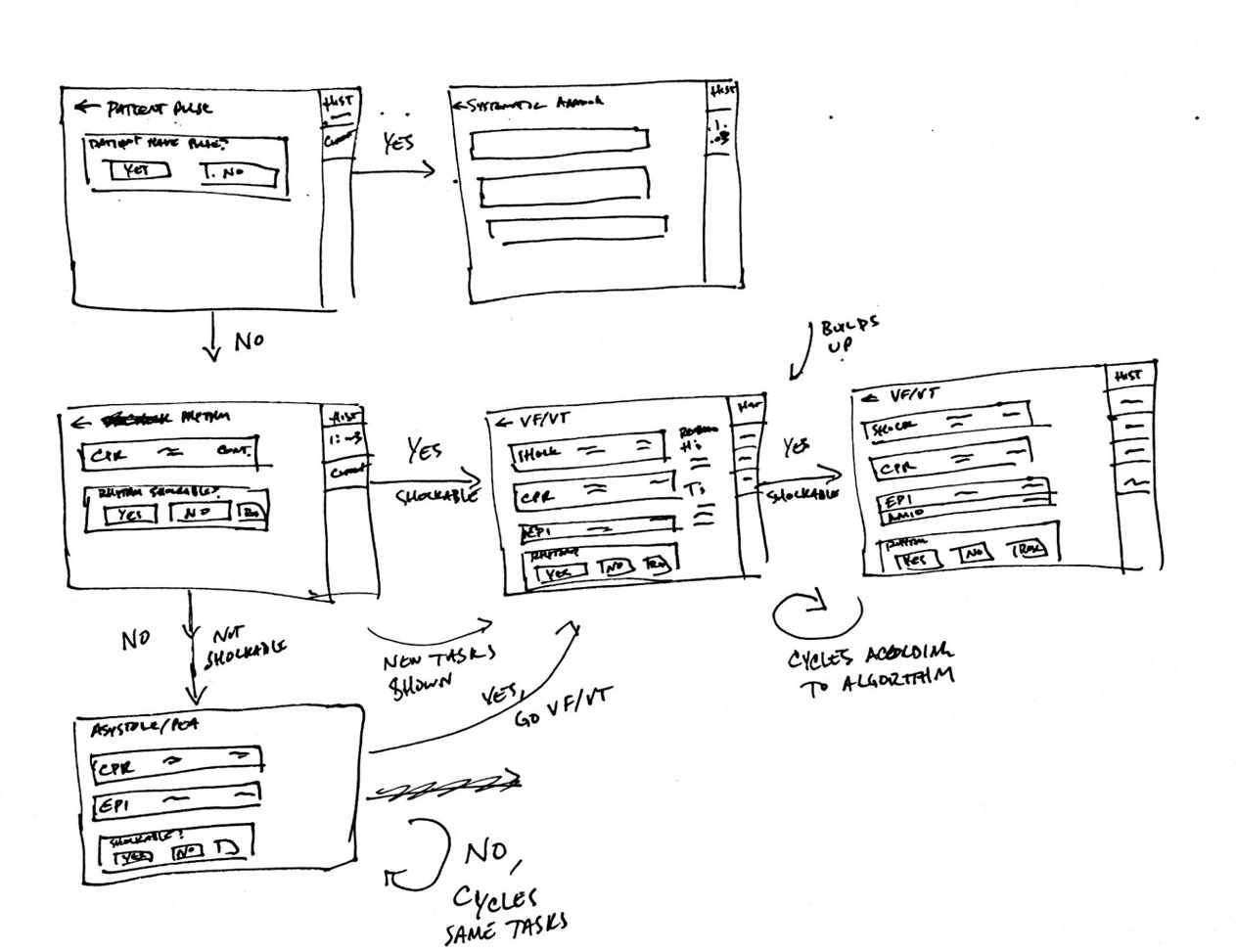

My team and I worked closely with clinicians as we went through our UI design process. This included sketching out UIs that closely represented the current PALS cards, and others that diverged pretty drastically. Working with clinicians, we proceeded through cognitive walkthroughs to evaluate our designs using wireframes and clickable prototypes.

We created rapid prototypes and evaluated some of these as well with our clinical collaborators.

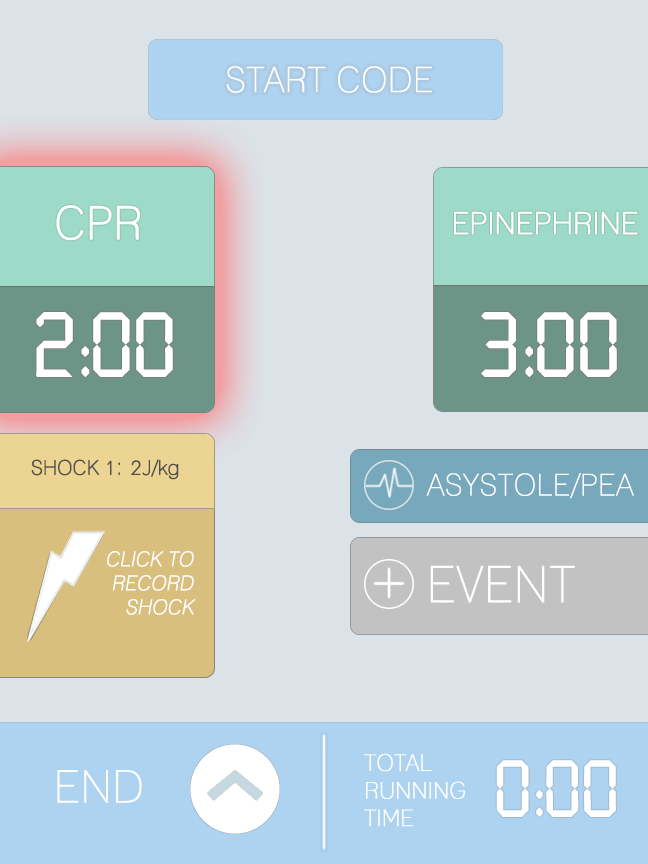

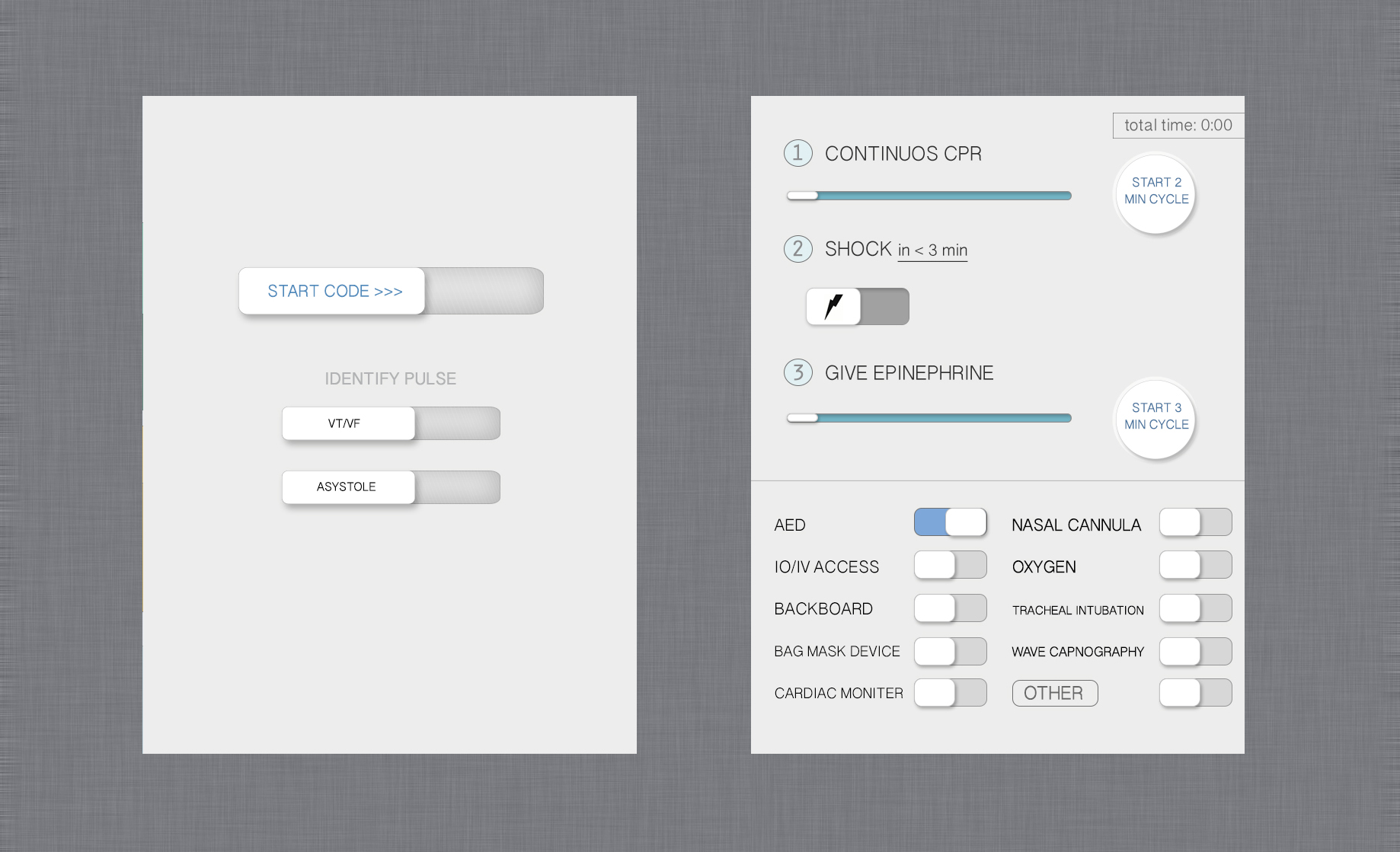

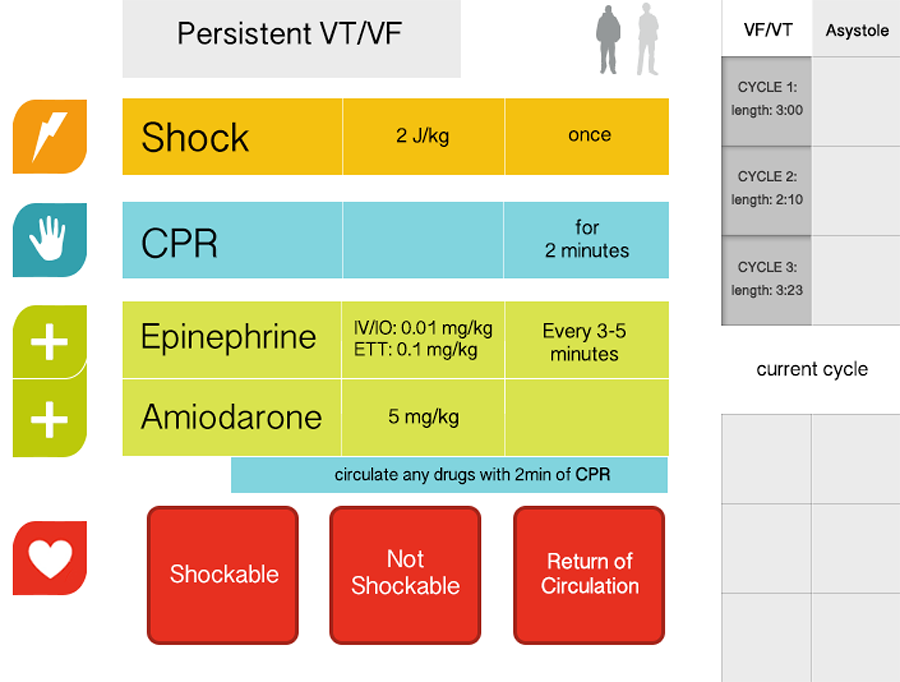

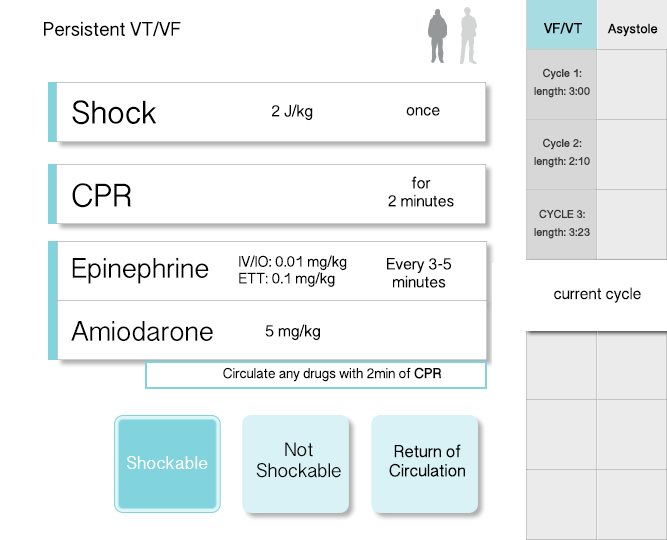

We also iterated through touch-based interfaces to explore how information might be architected differently.

We also went through many iterations designed for a tablet interface.

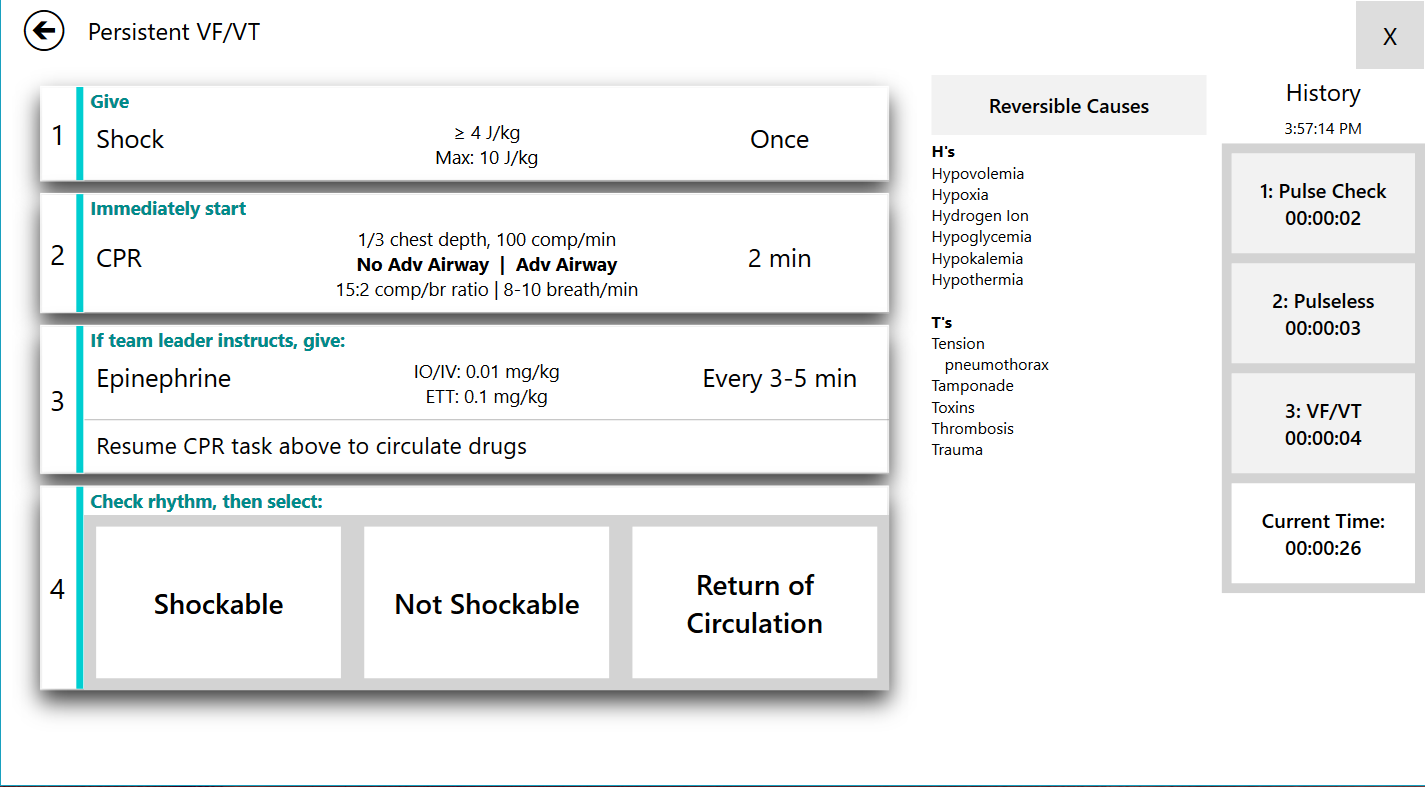

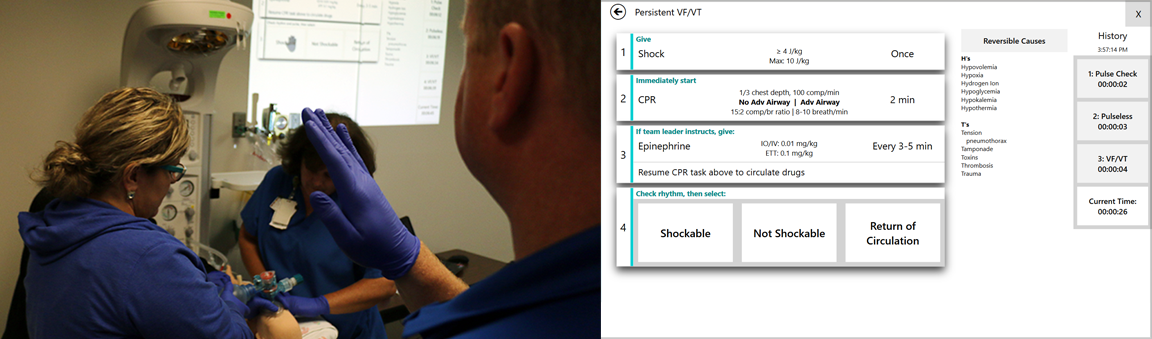

Eventually, we landed on an entirely new concept that brought the information clinicians need from disparate pages onto one main page, showing only what was relevant to the given situation. Obvious is obvious, Mike, right? But it's very difficult for clinicians to describe the various ways in which that information fits together, especially in these situations.

As for some aesthetic choices, we tried to design our interface so it could be used with both touch and gesture-based interaction with the Kinect. We used the Kinect's default gestures for scrolling and button presses, and incorporated individual selection by restricting who could control the Kinect based on an initial raised hand.
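The raised-hand idea can be illustrated with a small sketch. This is not our implementation and it does not use the Kinect SDK; `TrackedBody` and `ControllerLock` are hypothetical names, the joint heights are made-up values, and the rule that control is released when the controlling person leaves the frame is an assumption added only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TrackedBody:
    """One person seen by the sensor in a single frame (hypothetical structure)."""
    body_id: int
    head_y: float        # height of the head joint; larger means higher
    right_hand_y: float  # height of the right hand joint
    left_hand_y: float   # height of the left hand joint

    def hand_raised(self) -> bool:
        # A hand counts as raised when either hand is above the head.
        return max(self.left_hand_y, self.right_hand_y) > self.head_y


class ControllerLock:
    """Gives control to the first person who raises a hand.

    Assumption: control is released only when that person is no longer tracked.
    """

    def __init__(self) -> None:
        self.controller_id: Optional[int] = None

    def update(self, bodies: Sequence[TrackedBody]) -> Optional[int]:
        """Process one frame and return the id of the current controller, if any."""
        visible = {body.body_id for body in bodies}
        # Release the lock if the current controller has left the frame.
        if self.controller_id is not None and self.controller_id not in visible:
            self.controller_id = None
        # With no controller, hand control to the first raised hand seen.
        if self.controller_id is None:
            for body in bodies:
                if body.hand_raised():
                    self.controller_id = body.body_id
                    break
        return self.controller_id

    def accepts_gesture_from(self, body_id: int) -> bool:
        """Scroll and press gestures are honored only for the controller."""
        return self.controller_id == body_id
```

In a sketch like this, everyone else in the room can move freely without triggering the display, since gestures from anyone other than the current controller are ignored.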

I know what you're thinking though - what about people being in the way, and is something like calibration required? Thankfully, Microsoft's SDK didn't impose a calibration step, and clinicians wanted the cameras positioned high in the rooms to keep people and equipment from obstructing the camera's view. This worked well for the environments we'd be testing in.

Evaluation

Along with clinicians, I led the evaluation of our technology in two hospitals across the US - one in the central Midwest, and the second in the northeastern United States. We also came up with a creative name for the tool - Visual TASK (Team Awareness and Shared Knowledge). Our first exploration evaluated the Kinect as an interaction medium, along with information retrieval. The paper from this first exploration has been published. As suspected, it was just too early for such a technology. The Kinect had trouble maintaining a skeleton of an individual as they moved about the scene, and it was an unneeded distraction. It doesn't make sense to replace one distraction with another, so we got rid of the Kinect.

Since then, we have evaluated the touch-based interaction approach using a tablet and a shared projected display for the team. This evaluation occurred in situ with 14 clinicians. The results from this research are promising and are currently under review for publication.