Problem

Few tools currently exist to help clinical learners train to recognize pain, and those that do tend to look something like the image to the right. Even current patient mannequins cannot convey pain expressions, so providers may misjudge how much pain a patient is feeling.

The ability to recognize pain in patients is critical to effective healthcare delivery, and although it is taught in medical training, it could be improved.

Currently, healthcare settings often rely on a pain chart to identify an individual's pain, or on self-report methods that ask patients how they are feeling at a given point.

Approach

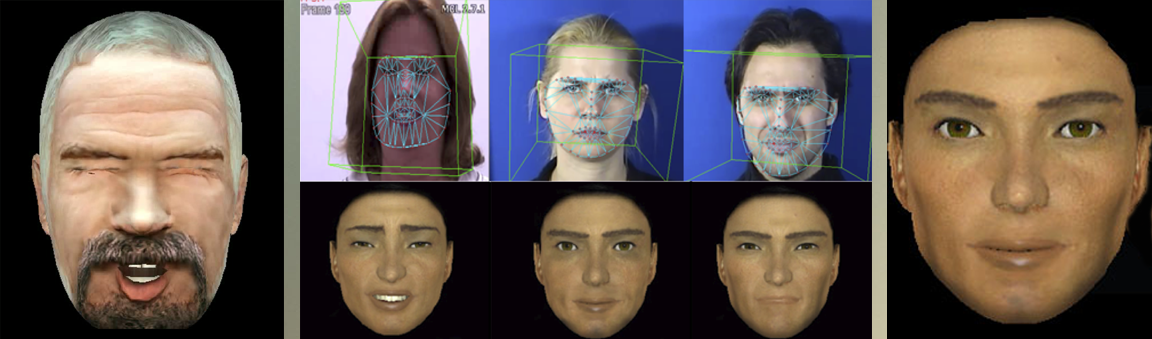

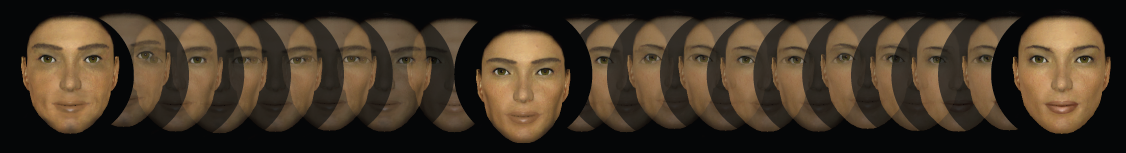

To address this problem, a colleague and I developed a tool that uses computer vision to synthesize pain expressions on virtual avatars and, later, on anthropomorphic robot heads. I extracted the character model for Alyx from the video game Half-Life 2, then used Adobe Photoshop to create 20 avatars that varied the model's perceived gender. Because we weren't sure how each avatar's gender would be perceived, we used Amazon Mechanical Turk to have workers label each avatar's gender, and we analyzed those labels statistically to ground our dataset.
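
The avatar-selection step can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure we actually ran: it assumes each Mechanical Turk worker rated an avatar on a hypothetical 1 (very feminine) to 7 (very masculine) scale with 4 as neutral, and the avatar names and ratings are made up. It summarizes each avatar by its mean rating and standard error, then picks the avatars with the highest mean, the lowest mean, and the mean closest to neutral.

```python
from __future__ import annotations

from statistics import mean, stdev

# Assumption: workers rated each avatar from 1 (very feminine) to 7 (very
# masculine); 4 is treated as the gender-neutral midpoint.
NEUTRAL = 4.0


def summarize(ratings_by_avatar: dict[str, list[int]]) -> dict[str, tuple[float, float]]:
    """Return (mean rating, standard error of the mean) for each avatar."""
    summary = {}
    for avatar, ratings in ratings_by_avatar.items():
        m = mean(ratings)
        se = stdev(ratings) / len(ratings) ** 0.5 if len(ratings) > 1 else 0.0
        summary[avatar] = (m, se)
    return summary


def pick_extremes(summary: dict[str, tuple[float, float]]) -> tuple[str, str, str]:
    """Return the (most masculine, most feminine, most neutral) avatar by mean rating."""
    most_male = max(summary, key=lambda a: summary[a][0])
    most_female = min(summary, key=lambda a: summary[a][0])
    most_neutral = min(summary, key=lambda a: abs(summary[a][0] - NEUTRAL))
    return most_male, most_female, most_neutral


# Hypothetical ratings from four workers per avatar.
ratings = {
    "avatar_01": [7, 6, 7, 6],
    "avatar_02": [1, 2, 1, 2],
    "avatar_03": [4, 4, 3, 5],
    "avatar_04": [5, 5, 6, 4],
}
print(pick_extremes(summarize(ratings)))  # → ('avatar_01', 'avatar_02', 'avatar_03')
```

Ranking raw means keeps the sketch short; a fuller analysis would likely test each mean against the neutral midpoint (for example with a one-sample t-test) before calling an avatar "neutral".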

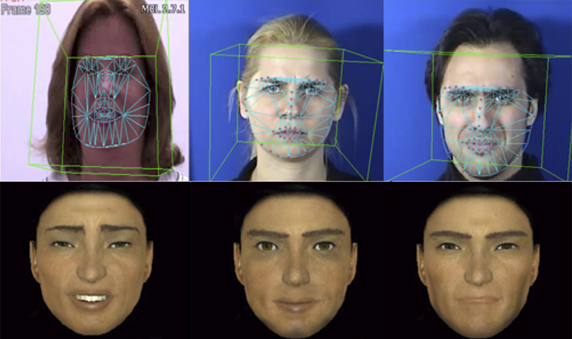

Mapping

Before we could use these avatars, we needed a dataset of people expressing pain naturally that we could map the avatars to. We used the McMaster Pain Archive, along with the MMI database, which includes acted facial expressions of anger and disgust, two emotions often conflated with pain. We applied constrained local models (CLMs) to these datasets to track facial landmarks and extract feature sets over time. We then took the "most" male, female, and gender-neutral avatars and mapped the extracted facial movements onto their game models using Steam's Source SDK. The mappings were aligned with the Action Units of the Facial Action Coding System (FACS), a widely used system for measuring facial expressions based on observable movement.
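
The landmark-to-Action-Unit step can be illustrated with a simplified geometric heuristic. This sketch is an assumption-laden stand-in, not the mapping we used: it assumes the CLM tracker outputs 68 (x, y) landmarks in the common iBUG-style layout, estimates only AU4 (brow lowerer) and AU43 (eyes closed) from how much the brow-to-eye gap and the eyelid opening shrink relative to a neutral frame, normalizes by inter-ocular distance, and clamps the result to [0, 1]. The controller names in `AU_TO_CONTROLLER` are hypothetical; real Source models define their own flex controller names.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Assumed 68-point landmark layout (iBUG-style indices, 0-based).
OUTER_EYE_CORNERS = (36, 45)
INNER_BROWS = (21, 22)
INNER_EYE_CORNERS = (39, 42)     # paired element-wise with INNER_BROWS
UPPER_LIDS = (37, 38, 43, 44)
LOWER_LIDS = (41, 40, 47, 46)    # paired element-wise with UPPER_LIDS

# Hypothetical mapping from FACS Action Units to avatar controller names.
AU_TO_CONTROLLER = {"AU4": "brow_lowerer", "AU43": "eyes_closed"}


def _dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def _features(pts: List[Point]) -> Dict[str, float]:
    """Brow-to-eye gap and eyelid opening, normalized by inter-ocular distance."""
    iod = _dist(pts[OUTER_EYE_CORNERS[0]], pts[OUTER_EYE_CORNERS[1]])
    brow_gap = sum(_dist(pts[b], pts[e]) for b, e in zip(INNER_BROWS, INNER_EYE_CORNERS)) / 2
    eye_open = sum(_dist(pts[u], pts[l]) for u, l in zip(UPPER_LIDS, LOWER_LIDS)) / 4
    return {"AU4": brow_gap / iod, "AU43": eye_open / iod}


def au_intensities(frame: List[Point], neutral: List[Point]) -> Dict[str, float]:
    """Intensity in [0, 1] = relative shrinkage of each feature versus the neutral frame."""
    cur, ref = _features(frame), _features(neutral)
    return {au: min(max((ref[au] - cur[au]) / ref[au], 0.0), 1.0) for au in ref}


def to_controller_weights(aus: Dict[str, float]) -> Dict[str, float]:
    """Rename AU intensities to the (hypothetical) avatar controller names."""
    return {AU_TO_CONTROLLER[au]: weight for au, weight in aus.items()}


# Toy neutral face (image coordinates, y grows downward); unused landmarks sit at the origin.
neutral: List[Point] = [(0.0, 0.0)] * 68
neutral[36], neutral[45] = (0.0, 0.0), (10.0, 0.0)      # inter-ocular distance 10
neutral[21], neutral[22] = (4.0, -3.0), (6.0, -3.0)     # inner brows
neutral[39], neutral[42] = (4.0, 0.0), (6.0, 0.0)       # inner eye corners
for upper, lower, x in ((37, 41, 1.0), (38, 40, 2.0), (43, 47, 8.0), (44, 46, 9.0)):
    neutral[upper], neutral[lower] = (x, -1.0), (x, 1.0)

# Toy "pain" frame: brows drop halfway toward the eyes and the lids close halfway.
pain = list(neutral)
pain[21], pain[22] = (4.0, -1.5), (6.0, -1.5)
for upper, lower, x in ((37, 41, 1.0), (38, 40, 2.0), (43, 47, 8.0), (44, 46, 9.0)):
    pain[upper], pain[lower] = (x, -0.5), (x, 0.5)

print(to_controller_weights(au_intensities(pain, neutral)))
```

In this toy example both weights come out at 0.5. A practical version would need per-AU calibration, since real faces rarely shrink these distances by their full neutral length, and pain-related AUs such as cheek raising or nose wrinkling would need their own features.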

After grounding our data, we conducted a study to measure whether participants could identify videos of avatars expressing pain, and we asked them to rate each avatar's pain level. We compared these ratings with the ratings used by the McMaster Pain Archive.
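
The evaluation can be sketched as two measures: how often participants correctly identified whether a clip showed pain, and how well their per-video mean ratings correlate with a reference rating. The record layout, video IDs, rating values, and the choice of Pearson correlation are all assumptions for illustration; the section does not specify which agreement statistic the comparison with the McMaster Pain Archive used.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Dict, List, NamedTuple


class Response(NamedTuple):
    video_id: str
    is_pain_video: bool   # ground truth: does the clip show pain?
    judged_pain: bool     # participant's identification
    rating: float         # participant's pain rating (hypothetical scale)


def identification_accuracy(responses: List[Response]) -> float:
    """Fraction of responses whose pain / no-pain judgment matches the ground truth."""
    return sum(r.judged_pain == r.is_pain_video for r in responses) / len(responses)


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def rating_agreement(responses: List[Response], reference: Dict[str, float]) -> float:
    """Correlate each video's mean participant rating with its reference rating."""
    by_video: Dict[str, List[float]] = defaultdict(list)
    for r in responses:
        by_video[r.video_id].append(r.rating)
    videos = sorted(by_video)
    return pearson([mean(by_video[v]) for v in videos], [reference[v] for v in videos])


# Hypothetical responses: two participants per video.
responses = [
    Response("v1", True, True, 4.0),
    Response("v1", True, True, 5.0),
    Response("v2", True, False, 2.0),
    Response("v2", True, True, 3.0),
    Response("v3", False, False, 0.0),
    Response("v3", False, True, 1.0),
]
reference = {"v1": 4.0, "v2": 2.0, "v3": 0.0}  # hypothetical archive ratings

print(identification_accuracy(responses))
print(rating_agreement(responses, reference))
```

Pearson correlation ignores a constant offset between scales, which is why the toy mean ratings (each 0.5 above the reference) still correlate at essentially 1. An error measure such as mean absolute error would only be meaningful if participant and reference ratings share a scale.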

Overall, we found that participants achieved higher pain-labeling accuracy with our synthesized faces than with manually animated faces. A deeper dive into our results can be found in our paper.